Spark installation

Prerequisites

Installing Spark for Mesos is surprisingly simple, provided the following are already in place:

- Mesos installed via Mesosphere, which includes OpenJDK 7 (check by opening http://[master node]:8080)

- HDFS set up (check by opening http://[namenode]:50900)
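Both web UIs can be checked from any node before you start. The following is a minimal sketch that assumes `curl` is installed. The function name `check_ui` is hypothetical and is not part of the setup described here.

```shell
#!/bin/sh
# Quick reachability check for the prerequisites above (a sketch).
# check_ui is a hypothetical helper name; the URLs you pass are your own.
set -u

# Prints OK/FAIL for a web UI and returns non-zero if it does not answer
# with a successful HTTP status within 5 seconds.
check_ui() {
  name="$1"
  url="$2"
  if curl --silent --fail --max-time 5 --output /dev/null "$url"; then
    echo "OK   $name ($url)"
  else
    echo "FAIL $name ($url)"
    return 1
  fi
}
```

For example, run `check_ui "Mesos" "http://[master node]:8080"` and then `check_ui "HDFS" "http://[namenode]:50900"`, with the bracketed parts replaced by your host names. A non-zero exit status means that UI is not reachable yet.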

Steps

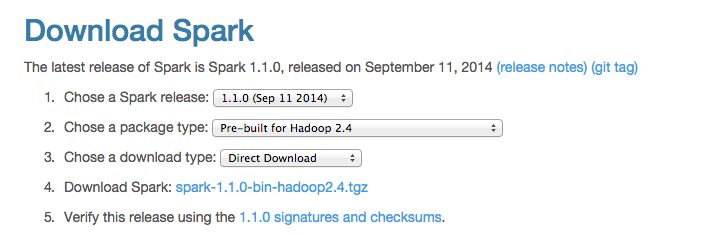

- Download Apache Spark, choosing the package built for the Hadoop version you are using

- Access your Namenode via ssh and

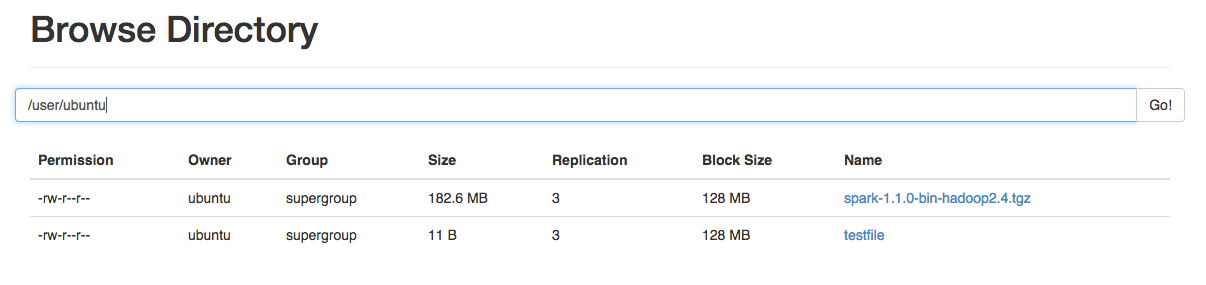

wgetthis version by copy paste the link address! - Upload the TAR File on HDFS

- Edit `conf/spark-env.sh` and set the following variables:

```shell
export MESOS_NATIVE_LIBRARY=<path to libmesos.so>   # default: /usr/local/lib/libmesos.so
export SPARK_EXECUTOR_URI=<URL to your Spark HDFS upload>
```

(We use hdfs://172.49.0.19:9000/user/ubuntu/spark.)

- Now we can start Spark on Mesos with the following command, where `host` is the Mesos master:

```shell
./bin/spark-shell --master mesos://host:5050
```
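The steps above can be collected into a small shell sketch. It assumes that the Hadoop `hdfs` client is on the `PATH` and that Spark has been unpacked locally. The tarball name, the NameNode address and all function names are hypothetical placeholders. The HDFS directory, the `libmesos.so` default and the Mesos master URL follow the values given above. `hdfs dfs -mkdir -p` and `hdfs dfs -put` are standard HDFS shell commands.

```shell
#!/bin/sh
# Sketch of the installation steps above. Values marked "hypothetical"
# are placeholders; replace them with your own.
set -eu

SPARK_TGZ="spark-x.y.z-bin-hadoopX.tgz"   # hypothetical: the package fetched with wget
HDFS_DIR="/user/ubuntu/spark"             # HDFS directory that receives the upload
HDFS_NAMENODE="hdfs://namenode:9000"      # hypothetical: your NameNode address
MESOS_MASTER="mesos://host:5050"          # your Mesos master
MESOS_LIB="/usr/local/lib/libmesos.so"    # default location of libmesos.so

# Join NameNode address, HDFS directory and file name into the executor URI.
executor_uri() {
  printf '%s%s/%s\n' "$1" "$2" "$3"
}

# Copy the downloaded tarball into HDFS (needs the hdfs client).
upload_to_hdfs() {
  hdfs dfs -mkdir -p "$HDFS_DIR"
  hdfs dfs -put "$SPARK_TGZ" "$HDFS_DIR/"
}

# Append the two Mesos settings to conf/spark-env.sh of a local Spark directory.
write_spark_env() {
  spark_home="$1"
  uri=$(executor_uri "$HDFS_NAMENODE" "$HDFS_DIR" "$SPARK_TGZ")
  cat >> "$spark_home/conf/spark-env.sh" <<EOF
export MESOS_NATIVE_LIBRARY=$MESOS_LIB
export SPARK_EXECUTOR_URI=$uri
EOF
}

# Start the Spark shell against the Mesos master.
start_shell() {
  spark_home="$1"
  "$spark_home/bin/spark-shell" --master "$MESOS_MASTER"
}
```

Run `upload_to_hdfs` on the NameNode. Then, on the machine where you launch the shell, run `write_spark_env /path/to/spark` followed by `start_shell /path/to/spark`. The file is appended to rather than overwritten, so any settings already in `spark-env.sh` are kept.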